- Technology Insights Daily

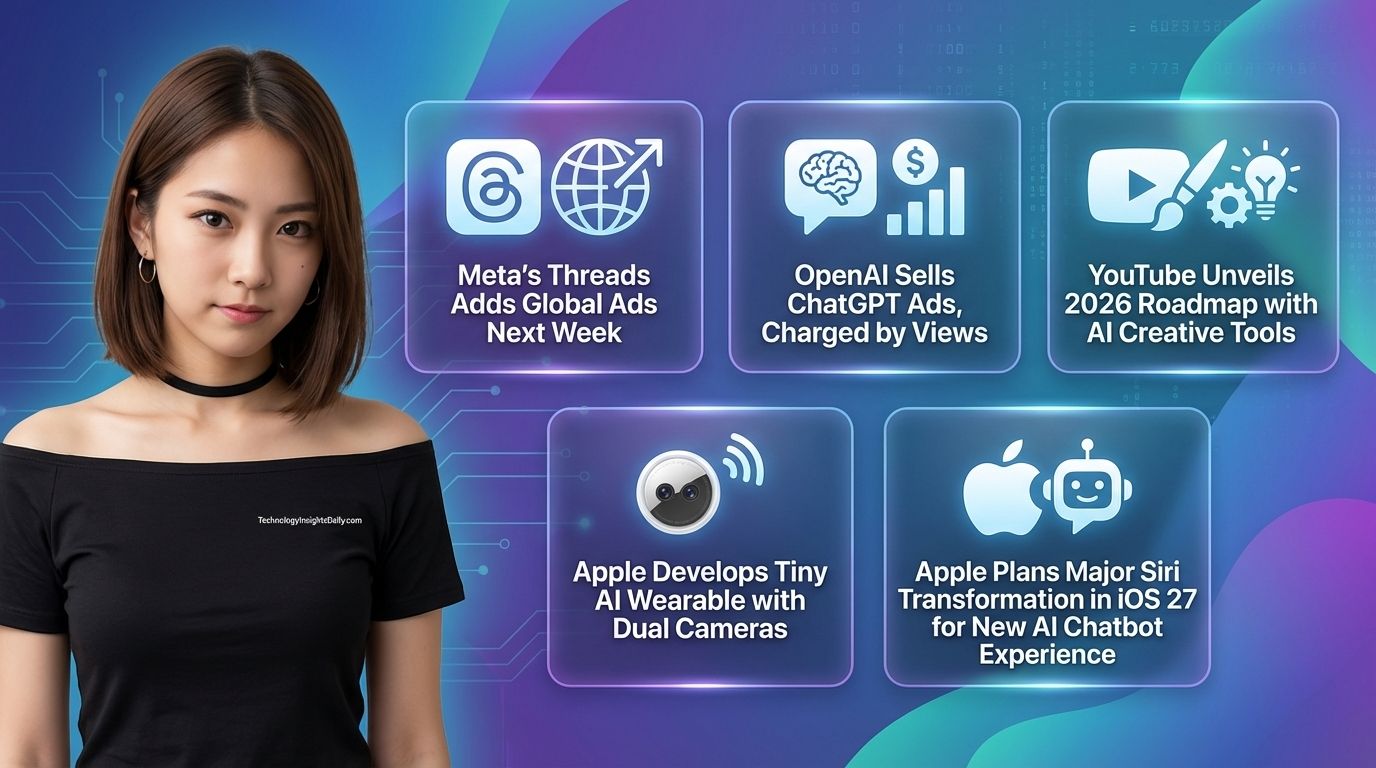

Meta Announces Threads to Globally Roll Out Ads Next Week

Before the headlines flood your timeline, I spend a few quiet minutes each morning sorting signal from noise. Not every tech update deserves your attention—but some genuinely shape how we work, invest, and live.

That’s why I write Technology Insights Daily. It’s my personal filter on AI breakthroughs, big-tech moves, and trends that actually matter, without the hype or fluff.

If you enjoy staying one step ahead without feeling overwhelmed, you’re exactly where you should be.

Meta is taking a big step toward monetizing its social platform, Threads, by rolling out advertisements to users worldwide beginning next week. After testing ads in select markets, the company confirmed it will gradually expand ad delivery across all regions, with the full rollout potentially taking several months. The move marks Meta’s effort to turn Threads into a more sustainable business with diversified revenue streams, leveraging its existing advertising infrastructure from Facebook and Instagram. The expansion comes as Threads continues to grow its user base, making the platform more attractive to advertisers seeking reach in conversational social feeds. Industry analysts see global ads as a key move to boost engagement and strengthen Meta’s ad ecosystem, even as competition with other platforms intensifies.

OpenAI is entering the advertising space by offering ad placements inside ChatGPT, adopting an impression-based pricing model rather than the traditional click-based approach. Under this strategy, advertisers pay based on how many times their ads are seen within the ChatGPT interface, which mirrors how search engine ads were sold in their early stages. OpenAI is directing initial sales to select marketers and may require significant early spending commitments as the platform refines its offering. The move reflects a broader effort to monetize AI tools while keeping the core conversational experience useful for users. While ads are set to appear in responses relevant to user queries, OpenAI has emphasized that paid content will be clearly labeled to maintain trust and transparency. This experiment could shape the future of monetization for large language models as they become more central to digital workflows.

YouTube has published its vision for 2026, centered around AI-powered tools designed to enhance creativity and improve monetization for creators. At the heart of the strategy is a suite of AI features that assist with content creation—such as automated dubbing, AI avatars for Shorts, and advanced analytics—along with new ways to help creators connect with audiences and streamline their workflow. In addition to creative tools, YouTube plans to introduce tighter controls to combat poor-quality AI-generated content, bolstering user trust and platform integrity. Another key element is deeper commerce integration through in-app shopping, allowing viewers to complete purchases directly within the YouTube experience. This approach reinforces YouTube’s dual focus on supporting creators while generating diversified revenue streams beyond traditional ads. The roadmap reflects YouTube’s effort to remain a central hub in the evolving creator economy by empowering both seasoned and emerging filmmakers and musicians.

Apple is rumored to be working on a compact AI-powered wearable, similar in size to its AirTag, that could transform how users interact with AI on the go. According to reports, this device will include multiple cameras, microphones, and speakers, enabling ambient sensing, visual context input, and conversational AI access without a phone. Positioned as a wearable pin or clip-on, the gadget may run Apple’s forthcoming AI assistant technology, bridging the gap between smartphones and standalone AI hardware. The design suggests Apple is exploring new form factors beyond traditional smartwatches or glasses, aiming to make AI accessible in everyday life with minimal friction. While the feature set and launch timeline remain unconfirmed, rumors point to a potential reveal in the next year, aligning with Apple’s broader push into generative AI products. If successful, this wearable could compete in the emerging space of AI assistants that accompany users throughout their day, responding to voice and visual cues in context.

Apple is gearing up for a major overhaul of Siri in iOS 27, shifting from a traditional voice assistant toward a full-fledged AI chatbot. According to multiple reports, this next-generation Siri is expected to integrate advanced generative AI capabilities, offering both voice and text interaction. The redesign would embed the conversational assistant deeply across Apple’s ecosystem—iPhone, iPad, and Mac—allowing it to understand context, handle more complex queries, and assist users more proactively. This initiative aims to bring Siri closer to competitors like ChatGPT and Google’s AI systems by leveraging cutting-edge language models and enhanced natural language understanding. Apple reportedly plans to showcase the new AI experience at its annual developer conference, with broader availability later in the year. This shift reflects broader industry trends where voice assistants are evolving into more capable and conversational AI companions that do more than execute simple commands.

Thanks for spending a few minutes with me today—it genuinely means more than you think. This newsletter grows slowly and intentionally, powered by curious readers like you.

If today’s issue sparked a thought or helped you see tech a little clearer, consider forwarding it, sharing it, or inviting a friend to subscribe.

Your support helps keep this focused, independent, and worth showing up for every day.

See you in the next issue 👋